MTT KUAE is Moore Threads' full-stack solution for AI data centers. It is based on the MTT S4000 GPU and the MCCX D800, a dual-processor 8-GPU server. This integrated solution efficiently addresses the challenges of deploying large-scale GPU computing power.

Ready-to-Use Integrated Solution

The MTT KUAE full-stack solution is built on Moore Threads' Universal GPUs and integrates hardware and software. The MTT KUAE Platform for cluster management and MTT KUAE ModelStudio for accessing model services complement the hardware so that the whole stack is optimized together. This end-to-end solution greatly simplifies the deployment and operation of large-scale GPU computing infrastructure.

Core Features

The MTT KUAE full-stack solution takes full advantage of Moore Threads GPUs.

Product Portfolio

Core Components of MTT KUAE

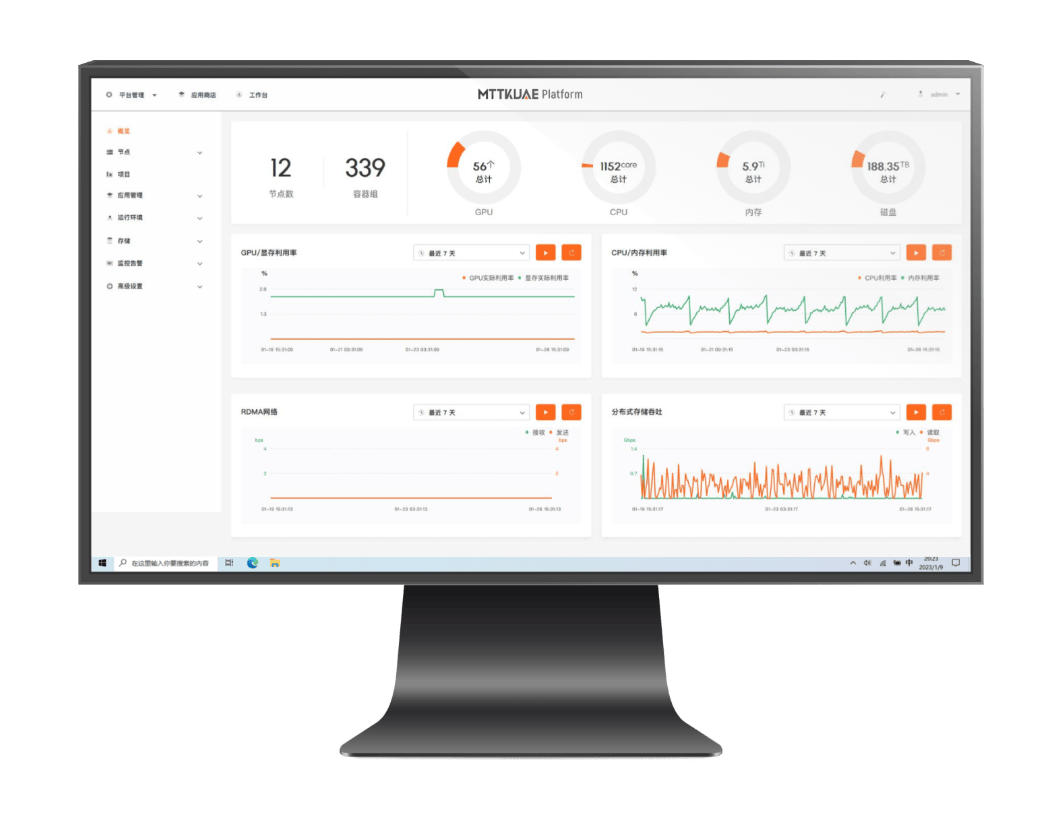

MTT KUAE Platform

This platform integrates hardware and software for large AI model training, distributed graphics rendering, streaming media processing, and scientific computing. By integrating Universal GPU computing, networking, and storage, it delivers highly reliable, high-performance computing services.

It offers flexible management of computing resources across multiple data centers and clusters, backed by multi-dimensional operational monitoring, alerting, and logging systems, enabling AI data centers to automate operations and maintenance.

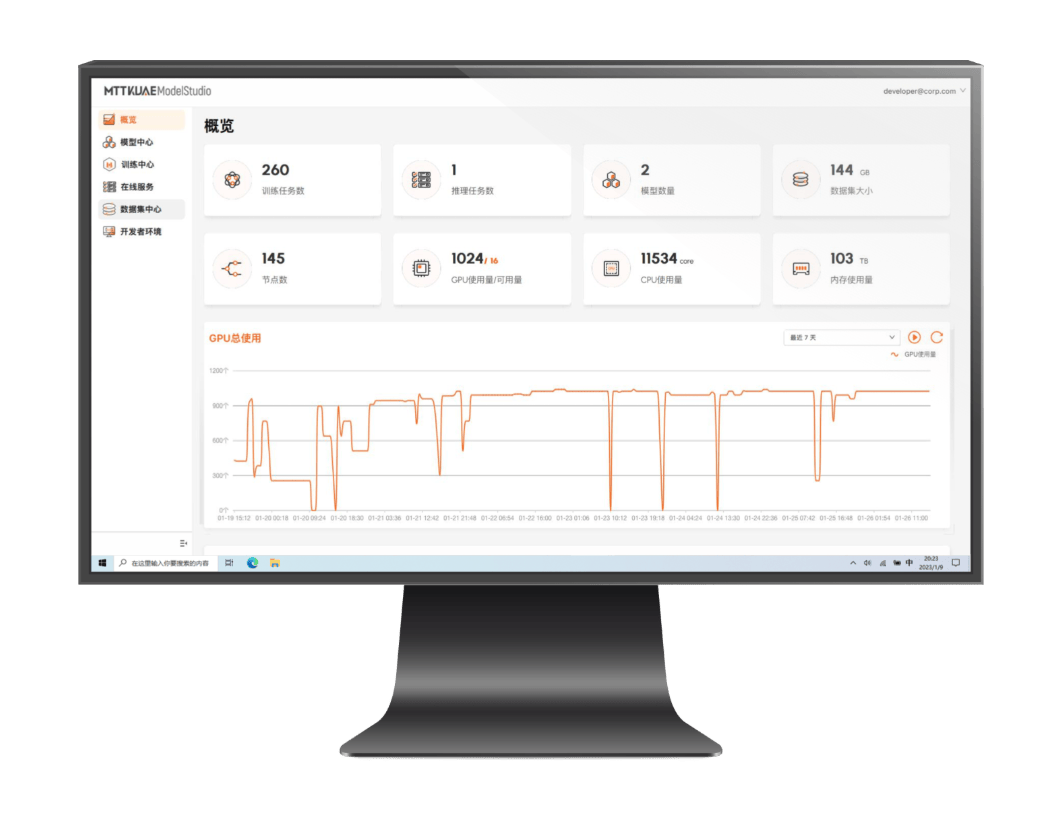

MTT KUAE ModelStudio

MTT KUAE ModelStudio covers pre-training, fine-tuning, and inference for all major open-source large models.

Using the MUSIFY tool, developers can effortlessly adapt their GPU resources to the MUSA architecture and deploy language model services with one-click containerization.

This suite of tools manages the full large-model lifecycle through an intuitive interface, helping teams organize workflows and lowering the barrier to entry for using large models.

Key Features of MTT KUAE

Modular design of large-scale GPU computing power with flexible deployment

Optimization of the linear speedup ratio of GPU computing power

Deployment of a high-speed parameter transmission network

Deployment and scheduling of heterogeneous computing clusters

Design and deployment of a computational power service support system

Scheduling of elastic computing power for cloud-native GPU clusters

Reliability and security of computing and storage

Highly reliable automatic problem diagnosis and recovery
