
LLM Training and Finetuning

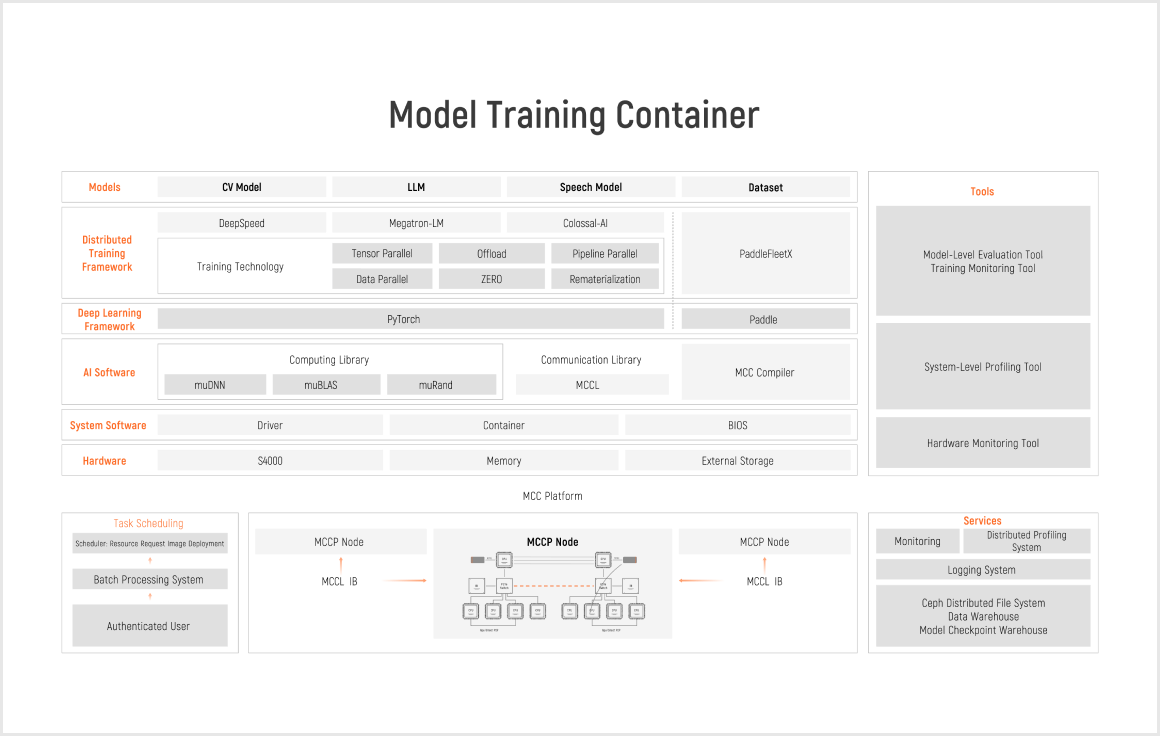

Training Platform Architecture

Moore Threads' large model training platform is fully compatible with CUDA and PyTorch and supports distributed training frameworks such as Megatron-LM, DeepSpeed, FSDP, and Colossal-AI. It combines high performance with flexibility, so mainstream large models such as GPT, LLaMa, and GLM can be trained with one click. On the KUAE thousand-GPU computing cluster, large model training reaches a linear speedup ratio above 91%. Beyond development, the platform also supports training supervision, automatic restart, and resumption.
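How the platform implements training supervision, automatic restart, and resumption is not described here. As a rough illustration of the general pattern only, the sketch below checkpoints a step counter to disk, simulates one failure, and lets a supervisor loop restart the job from the last checkpoint. All function names, the JSON checkpoint format, and the `fail_at` fault injection are hypothetical and are not part of any Moore Threads API.

```python
import json
import os
import tempfile


def load_step(path):
    """Return the last checkpointed step, or 0 if no checkpoint exists."""
    if not os.path.exists(path):
        return 0
    with open(path) as f:
        return json.load(f)["step"]


def save_step(path, step):
    """Write the checkpoint via a temp file so a crash never leaves a partial file."""
    tmp = path + ".tmp"
    with open(tmp, "w") as f:
        json.dump({"step": step}, f)
    os.replace(tmp, path)


def train(path, total_steps, fail_at=None):
    """Run from the last checkpoint; optionally simulate a crash at step fail_at."""
    step = load_step(path)
    while step < total_steps:
        if fail_at is not None and step == fail_at:
            raise RuntimeError("simulated node failure")
        step += 1  # stand-in for one real training step
        save_step(path, step)
    return step


def supervise(path, total_steps, max_restarts=3, fail_at=None):
    """Restart training after a failure, resuming from the checkpoint."""
    restarts = 0
    while True:
        try:
            return train(path, total_steps, fail_at), restarts
        except RuntimeError:
            restarts += 1
            if restarts > max_restarts:
                raise
            fail_at = None  # the simulated fault happens only once


if __name__ == "__main__":
    ckpt = os.path.join(tempfile.mkdtemp(), "ckpt.json")
    print(supervise(ckpt, total_steps=10, fail_at=4))
```

A real job would checkpoint model weights, optimizer state, and data-loader position rather than a bare step counter, and would usually save every few hundred steps instead of every step.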

Training and Finetuning Examples

MTT S4000 features 128 Tensor Cores, 48 GB of memory, and ultra-fast inter-card communication enabled by MTLink. Through Moore Threads' training platform, it supports training for various LLMs, including LLaMa, ChatGPT, ChatGLM, Qwen, and Baichuan. With distributed training strategies for single-node and multi-node systems, it accelerates the training and finetuning of LLMs with 60 to 100 billion parameters.
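To see why models of this size need distributed training, a back-of-the-envelope estimate helps. The sketch below assumes the common rule of thumb of 16 bytes per parameter for mixed-precision Adam training (FP16 weights and gradients plus FP32 optimizer state) and treats 1 GB as 10^9 bytes. Both are assumptions made for illustration, not Moore Threads figures, and activations and communication buffers are ignored.

```python
import math

# Rule of thumb for mixed-precision Adam training (an assumption, not a
# vendor figure): 2 bytes of FP16 weights, 2 bytes of FP16 gradients, and
# 12 bytes of FP32 optimizer state per parameter.
BYTES_PER_PARAM = 2 + 2 + 12

GPU_MEMORY_GB = 48  # MTT S4000 memory, as stated in the text


def model_state_gb(num_params):
    """Memory for weights, gradients, and optimizer state, in GB (10^9 bytes)."""
    return num_params * BYTES_PER_PARAM / 1e9


def min_gpus(num_params, gpu_memory_gb=GPU_MEMORY_GB):
    """Lower bound on GPU count when model states are sharded evenly."""
    return math.ceil(model_state_gb(num_params) / gpu_memory_gb)


if __name__ == "__main__":
    for params in (60_000_000_000, 100_000_000_000):
        print(params, model_state_gb(params), min_gpus(params))
```

Under these assumptions, a 60-billion-parameter model needs about 960 GB for model states alone, or at least 20 cards with 48 GB each, and a 100-billion-parameter model needs at least 34. Real deployments need more headroom for activations.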

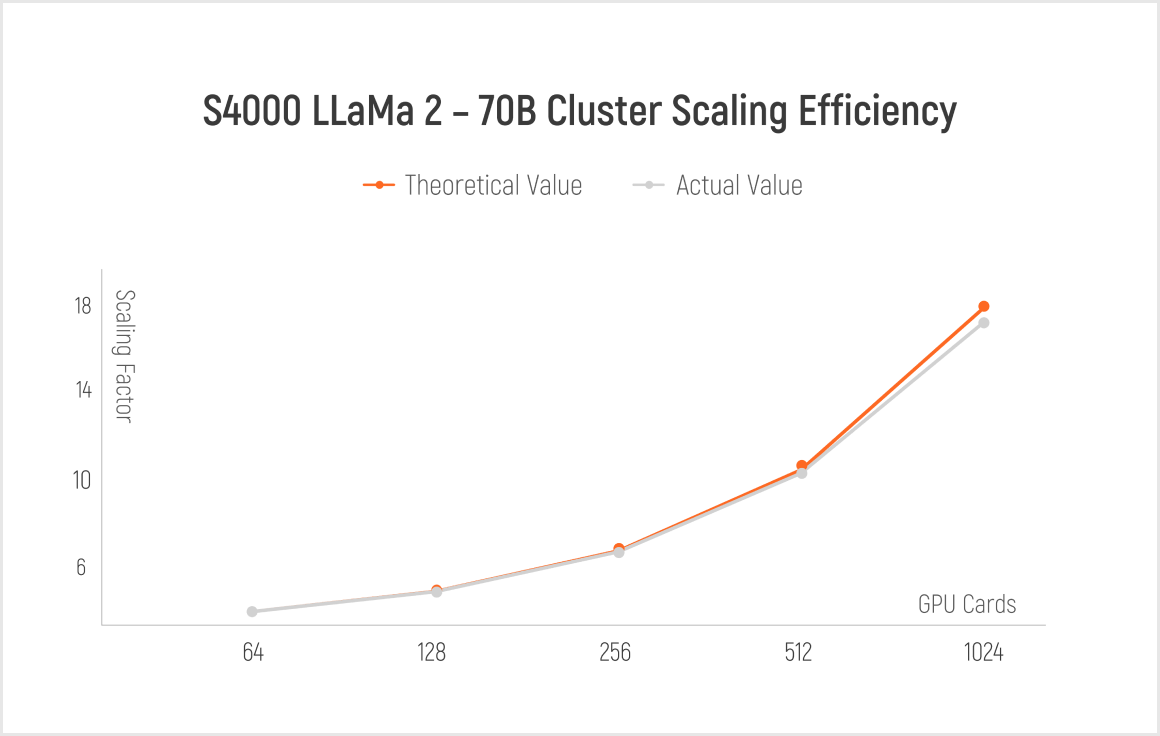

Cluster Scaling Efficiency

The Moore Threads KUAE Computing Cluster platform, designed for pretraining, finetuning, and inference of models with billions of parameters, achieves a 91% linear speedup ratio in a thousand-card cluster. The platform is optimized across applications, distributed systems, training frameworks, communication libraries, firmware, operators, and hardware. MTLink, a proprietary interconnect technology based on the MTT S4000 architecture, supports MTLink Bridge connections between two, four, or eight cards and reaches an inter-card bandwidth of up to 240 GB/s. This speeds up training in clusters with 64 to 1024 cards and improves the linearity of multi-card scaling.
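The text does not state how the linear speedup ratio is measured or which baseline it uses. A common definition, assumed here, divides the measured speedup by the ideal speedup relative to a smaller baseline cluster. The throughput numbers in the example are hypothetical and chosen only to reproduce a 91% ratio.

```python
def linear_speedup_ratio(base_gpus, base_throughput, gpus, throughput):
    """Measured speedup divided by ideal (linear) speedup.

    Throughput can be in any consistent unit, such as samples or tokens
    per second. A value of 1.0 means perfectly linear scaling.
    """
    measured = throughput / base_throughput
    ideal = gpus / base_gpus
    return measured / ideal


if __name__ == "__main__":
    # Hypothetical measurements: 64 cards as the baseline, 1024 cards scaled.
    ratio = linear_speedup_ratio(64, 100.0, 1024, 1456.0)
    print(f"{ratio:.0%}")
```

In this made-up case the ideal speedup is 16x and the measured speedup is 14.56x, which gives a ratio of 91%.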

Large Model Inference Service Platform

MTT S4000, equipped with 128 Tensor Cores and 48 GB of memory, effectively supports inference for mainstream LLMs, such as LLaMa, ChatGLM, Qwen, and Baichuan.

Supporting KUAE Cluster Products

MTT KUAE is Moore Threads' full-stack solution for artificial intelligence data centers. It is based on the S4000 GPU and MCCX D800 all-in-one cluster computing unit, which is equipped with eight dual-processor S4000 GPUs. This integrated solution addresses the challenges of deploying large-scale GPU computing power efficiently.

Advanced-Generation Tensor Cores

Moore Threads' advanced-generation Tensor Cores assist in the training, finetuning, and inference of LLMs. MTT S4000 includes 8,192 vector cores and 128 Tensor Cores. It supports mainstream precision computing formats such as FP64, FP32, TF32, FP16, BF16, and INT8.
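The choice of precision format directly affects how large a model fits in the card's 48 GB of memory. The sketch below estimates weight storage per format. The byte widths are the standard storage sizes for these formats, with TF32 assumed to occupy a 32-bit container in memory as it does on other platforms. The 13-billion-parameter model is a hypothetical example, 1 GB is treated as 10^9 bytes, and only weights are counted, not the KV cache or activations.

```python
# Storage size per element for each format named in the text. TF32 is
# assumed to be stored in 32 bits (an assumption for this hardware).
BYTES_PER_ELEMENT = {
    "FP64": 8,
    "FP32": 4,
    "TF32": 4,
    "FP16": 2,
    "BF16": 2,
    "INT8": 1,
}


def weights_gb(num_params, fmt):
    """Weight storage in GB (10^9 bytes) for a model held in the given format."""
    return num_params * BYTES_PER_ELEMENT[fmt] / 1e9


def fits_on_card(num_params, fmt, memory_gb=48):
    """True if the weights alone fit in one card's memory."""
    return weights_gb(num_params, fmt) <= memory_gb


if __name__ == "__main__":
    params = 13_000_000_000  # hypothetical 13B-parameter model
    for fmt in BYTES_PER_ELEMENT:
        print(fmt, weights_gb(params, fmt), fits_on_card(params, fmt))
```

For this example, FP32 weights take 52 GB and do not fit on a single card, while FP16 or BF16 weights take 26 GB and INT8 weights take 13 GB.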

Third-Generation MUSA Software Stack

MUSA is Moore Threads' self-developed metacomputing unified architecture, which includes an instruction set architecture, MUSA programming model, driver, runtime library, operator library, communication library, and mathematical library. CUDA programs can be smoothly migrated to MUSA through Moore Threads' self-developed MUSIFY tool.

Fully Supports Mainstream Graphics APIs

MTT S4000 supports mainstream graphics APIs such as DirectX, Vulkan, OpenGL, and OpenGL ES. It provides versatile graphics rendering for a variety of scenarios, including digital twins, cloud gaming, cloud rendering, and content creation. It also supports large model inference, making it a one-stop solution for multi-modal scenarios such as AIGC.
